Amazon and MIT announce Science Hub gift project awards

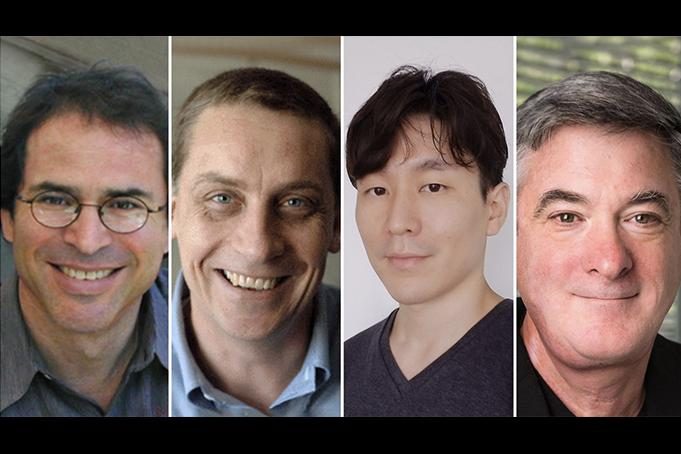

Four MIT professors are the recipients of the inaugural call for research projects.

Amazon and MIT today named the four awardees from the inaugural call for research projects as part of the Science Hub.

The Science Hub — which was launched in October 2021 and is administered at MIT by the Schwarzman College of Computing — supports research, education, and outreach efforts in areas of mutual interest, beginning with artificial intelligence and robotics in the first year.

The research projects dovetail with the hub’s themes: ensuring that the benefits of AI and robotics innovations are shared broadly, and broadening participation in the research by diverse, interdisciplinary scholars.

“We are excited to support these research projects through the Science Hub,” said Jeremy Wyatt, senior manager of applied science at Amazon Robotics. “Each project addresses a hard research problem that requires exploratory work. By supporting this work, Amazon gives back to the robotics science community and helps to develop the next generation of researchers.”

“Our researchers are working at the intersection of autonomous mobile robots, optimization, control, and perception,” said Aude Oliva, director of strategic industry engagement in the MIT Schwarzman College of Computing and the principal investigator for the Science Hub. “We hope that this intersectional approach will result in technology that brings state-of-the-art robotics to homes around the world.”

The four projects were selected by a committee composed of members from both MIT and Amazon. Below are this year’s recipients, their research projects, and what they’re proposing.

Edward Adelson, John and Dorothy Wilson Professor of Vision Science, “Multimodal Tactile Sensing”

“We propose to build the best tactile sensor in existence. We already can build robot fingers with extremely high spatial acuity (GelSight), but these fingers lack two important aspects of touch: temperature and vibration. We will incorporate thermal sensing with an array of liquid crystal dots covering the skin; the camera will infer the distribution of temperature from the colors. For vibrations, we will try various sensing methods, including IMUs, microphones, and mouse cameras.”

Jonathan How, Richard Cockburn Maclaurin Professor of Aeronautics and Astronautics, “Safety and Predictability in Robot Navigation for Last-mile Delivery”

“Economically viable last-mile autonomous delivery requires robots that are both safe and resilient in the face of previously unseen environments. With these goals in mind, this project aims to develop algorithms to enhance the safety, availability, and predictability of mobile robots used for last-mile delivery systems. One goal of the work is to extend our state-of-the-art navigation algorithms (based on reinforcement learning (RL) and model-predictive control (MPC)) to incorporate uncertainties induced by the real world (e.g., imperfect perception, heterogeneous pedestrians), which will enable the learning of cost-to-go models that more accurately predict the time it might take the robot to reach its goal in a real-world scenario. A second goal of the work is to provide formal safety guarantees for AI-based navigation algorithms in dynamic environments, which will involve extending our recent neural network verification algorithms that compute reachable sets for static obstacle avoidance.”

Yoon Kim, assistant professor of electrical engineering and computer science, “Efficient training of large language models”

“Large language models have been shown to exhibit surprisingly general-purpose language capabilities. Critical to their performance is the sheer scale at which they are trained, with continuous improvements coming from increasingly larger models. However, training such models from scratch is extremely resource-intensive. This proposal aims to investigate methods for more efficiently training large language models by leveraging smaller language models that have already been pretrained. For example, how can we use GPT-3 to more efficiently train GPT-4? In particular, we propose to exploit the linear algebraic structure of smaller, pretrained networks to better initialize and train the parameters of larger networks.”
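
For readers curious what initializing a larger network from a smaller one can look like in practice, here is a minimal sketch. It is not the method proposed in the project, whose details are not given here. It swaps in a well-known function-preserving widening trick in the style of Net2Net: new hidden units copy existing ones, and the outgoing weights of each copied unit are divided by its number of copies, so the wider network starts out computing exactly the same function as the small one. The two-layer network, the function names (`forward`, `widen`), and the sizes are all hypothetical and chosen only for illustration.

```python
import random

def relu(v):
    return [max(0.0, x) for x in v]

def matvec(W, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def forward(W1, W2, x):
    # Toy two-layer network: y = W2 * relu(W1 * x).
    return matvec(W2, relu(matvec(W1, x)))

def widen(W1, W2, new_width, rng):
    """Grow the hidden layer to new_width units without changing the output.

    Assumption for illustration: each extra hidden unit copies a randomly
    chosen existing unit, and the outgoing weights of every copied unit
    are divided by how many copies of it now exist.
    """
    old_width = len(W1)
    assert new_width >= old_width
    # mapping[j] is the small-network unit that wide-network unit j copies.
    extra = [rng.randrange(old_width) for _ in range(new_width - old_width)]
    mapping = list(range(old_width)) + extra
    counts = [mapping.count(i) for i in range(old_width)]
    big_W1 = [list(W1[src]) for src in mapping]
    big_W2 = [[row[src] / counts[src] for src in mapping] for row in W2]
    return big_W1, big_W2

rng = random.Random(0)
# Hypothetical "small pretrained" network: 3 inputs, 4 hidden units, 2 outputs.
small_W1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
small_W2 = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]

big_W1, big_W2 = widen(small_W1, small_W2, new_width=7, rng=rng)

x = [0.5, -1.0, 2.0]
small_out = forward(small_W1, small_W2, x)
big_out = forward(big_W1, big_W2, x)
```

In this sketch the wider network matches the small one's output up to floating-point rounding, so any further training starts from what the small model already computes rather than from scratch.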

Joseph Paradiso, Alexander W. Dreyfoos Professor of Media Arts and Sciences, “Soft artificial intelligent skin for tactile-environmental sensing and human-robot interaction”

“Multimodal sensory information can help robots to interact more safely, effectively, and collaboratively with humans. Artificial skin with very compact electronics integration that can be wrapped onto any arbitrary surface, including a robot skin, will need to be realized. Herewith, we propose a bio-inspired, high-density sensor array made on a soft deformable substrate for large-scale artificial intelligent skin. Each node contains a local microprocessor together with a collection of multiple sensors, providing multimodal data and self-aware capability that can be fused and used to localize and detect various deformation, proxemic, tactile, and environmental changes. We have developed a prototype that can simultaneously sense and respond to a variety of stimuli. This enables our proposed research effort to scale and fabricate a dense artificial skin and apply various self-organizing networking and novel sensing techniques for large-scale interaction, localization, and visualization with applications in robotics, human-machine interfaces, indoor/environmental sensing, and cross-reality immersion.”