Addressing the promises and challenges of AI

Final day of the MIT Schwarzman College of Computing celebration explores enthusiasm, caution about AI’s rising prominence in society.

A three-day celebration this week for the MIT Stephen A. Schwarzman College of Computing focused on the Institute’s new role in helping society navigate a promising yet challenging future for artificial intelligence (AI) as it seeps into nearly all aspects of society.

On Thursday, the final day of the event, a series of talks and panel discussions by researchers and industry experts conveyed enthusiasm for AI-enabled advances in many global sectors, but emphasized the concerns that accompany the computing revolution, on topics such as data privacy, job automation, and personal and social issues. The day also included a panel called “Computing for the People: Ethics and AI,” whose participants agreed that collaboration is key to making sure artificial intelligence serves the public good.

Kicking off the day’s events, MIT President Rafael Reif said the MIT Schwarzman College of Computing will train students in an interdisciplinary approach to AI. It will also train them to take a step back and weigh potential downsides of AI, which is poised to disrupt “every sector of our society.”

“Everyone knows pushing the limits of new technologies can be so thrilling that it’s hard to think about consequences and how [AI] too might be misused,” Reif said. “It is time to educate a new generation of technologists in the public interest, and I’m optimistic that the MIT Schwarzman College [of Computing] is the right place for that job.”

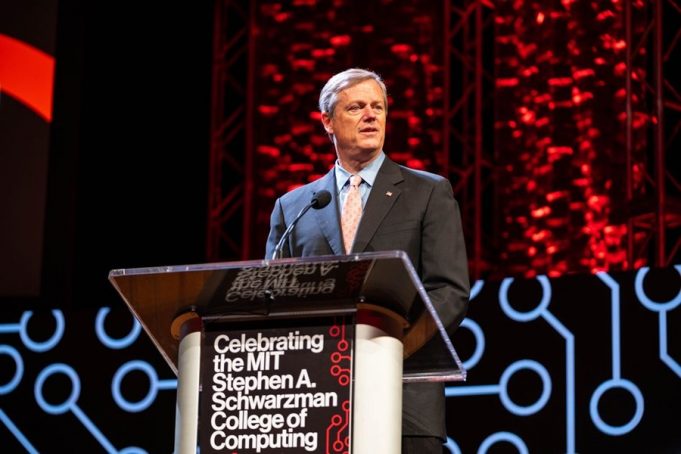

In opening remarks, Massachusetts Governor Charlie Baker gave MIT “enormous credit” for focusing its research and education on the positive and negative impact of AI. “Having a place like MIT … think about the whole picture in respect to what this is going to mean for individuals, businesses, governments, and society is a gift,” he said.

Personal and industrial AI

In a panel discussion titled “Computing the Future: Setting New Directions,” MIT alumnus Drew Houston ’05, co-founder of Dropbox, described an idyllic future in which, by 2030, AI could take over many tedious professional tasks, freeing humans to be more creative and productive.

Workers today, Houston said, spend more than 60 percent of their working lives organizing emails, coordinating schedules, and planning various aspects of their job. As computers start refining skills — such as analyzing and answering queries in natural language, and understanding very complex systems — each of us may soon have AI-based assistants that can handle many of those mundane tasks, he said.

“We’re on the eve of a new generation of our partnership with machines … where machines will take a lot of the busy work so people can … spend our working days on the subset of our work that’s really fulfilling and meaningful,” Houston said. “My hope is that, in 2030, we’ll look back on now as the beginning of a revolution that freed our minds the way the industrial revolution freed our hands. My last hope is that … the new [MIT Schwarzman College of Computing] is the place where that revolution is born.”

Speaking with reporters before the panel discussion “Computing for the Marketplace: Entrepreneurship and AI,” Eric Schmidt, former executive chairman of Alphabet and a visiting innovation fellow at MIT, also described a coming age of AI assistants. Smart teddy bears could help children learn language, virtual assistants could plan people’s days, and personal robots could ensure the elderly take medication on schedule. “This model of an assistant … is at the basis of the vision of how people will see a difference in our lives every day,” Schmidt said.

He noted many emerging AI-based research and business opportunities, including analyzing patient data to predict risk of diseases, identifying new compounds for drug discovery, and predicting regions where wind farms produce the most power, which is critical for obtaining clean-energy funding. “MIT is at the forefront of every single example that I just gave,” Schmidt said.

When panel moderator Katie Rae, executive director of The Engine, asked iRobot co-founder Helen Greiner what she thinks is the most significant aspect of AI in industry, Greiner cited supply chain automation. Robots could, for instance, package goods more quickly and efficiently, and driverless delivery trucks could soon deliver those packages, she said: “Logistics in general will be changed” in the coming years.

Finding an algorithmic utopia

For Institute Professor Robert Langer, another panelist in “Computing for the Marketplace,” AI holds great promise for early disease diagnoses. With enough medical data, for instance, AI models can identify biological “fingerprints” of certain diseases in patients. “Then, you can use AI to analyze those fingerprints and decide what … gives someone a risk of cancer,” he said. “You can do drug testing that way too. You can see [a patient has] a fingerprint that … shows you that a drug will treat the cancer for that person.”

But in the “Computing the Future” panel, David Siegel, co-chair of Two Sigma Investments and founding advisor for the MIT Quest for Intelligence, addressed issues with data, which is at the heart of AI. With the aid of AI, Siegel has seen computers go from helpful assistants to “routinely making decisions for people” in business, health care, and other areas. While AI models can benefit the world, “there is a fear that we may move in a direction that’s far from an algorithmic utopia.”

Siegel drew parallels between AI and the popular satirical film “Dr. Strangelove,” in which an “algorithmic doomsday machine” threatens to destroy the world. AI algorithms must be made unbiased, safe, and secure, he said. That involves dedicated research in several important areas, at the MIT Schwarzman College of Computing and around the globe, “to avoid a Strangelove-like future.”

One important area is data bias and security. Data bias, for instance, leads to inaccurate and untrustworthy algorithms. And if researchers can guarantee the privacy of medical data, he added, patients may be more willing to contribute their records to medical research.

Siegel noted a real-world example where, due to privacy concerns, the Centers for Medicare and Medicaid Services years ago withheld patient records from a large research dataset being used to study substance misuse, which is responsible for tens of thousands of U.S. deaths annually. “That omission was a big loss for researchers and, by extension, patients,” he said. “We are missing the opportunity to solve pressing problems because of the lack of accessible data. … Without solutions, the algorithms that drive our world are at high risk of becoming data-compromised.”

Seeking humanity in AI

In a panel discussion earlier in the day, “Computing: Reflections and the Path Forward,” Sherry Turkle, the Abby Rockefeller Mauzé Professor of the Social Studies of Science and Technology, called on people to avoid “friction free” technologies, which help people avoid the stress of face-to-face interactions.

AI is now “deeply woven into this [friction-free] story,” she said, noting that there are apps that help users plan walking routes, for example, to avoid people they dislike. “But who said a life without conflict … makes for the good life?” she said.

She concluded with a “call to arms” for the new college to help people understand the consequences of the digital world where confrontation is avoided, social media are scrutinized, and personal data are sold and shared with companies and governments: “It’s time to reclaim our attention, our solitude, our privacy, and our democracy.”

Speaking on the same panel, Patrick H. Winston, the Ford Professor of Engineering at MIT, concluded with an equally humanistic, and optimistic, message. After walking the audience through the history of AI at MIT, including his run as director of the Artificial Intelligence Laboratory from 1972 to 1997, he told the audience he was going to discuss the greatest computing innovation of all time.

“It’s us,” he said, “because nothing can think like we can. We don’t know how to make computers do it yet, but it’s something we should aspire to. … In the end, there’s no reason why computers can’t think like we [do] and can’t be ethical and moral like we aspire to be.”